Addition

Addition (usually signified by the plus symbol +) is one of the four basic operations of arithmetic, the other three being subtraction, multiplication and division.[2] The addition of two whole numbers results in the total amount or sum of those values combined. The example in the adjacent image shows two columns of three apples and two apples each, totaling five apples. This observation is equivalent to the mathematical expression "3 + 2 = 5" (that is, "3 plus 2 is equal to 5").

Besides counting items, addition can also be defined and executed without referring to concrete objects, using abstractions called numbers instead, such as integers, real numbers and complex numbers. Addition belongs to arithmetic, a branch of mathematics. In algebra, another area of mathematics, addition can also be performed on abstract objects such as vectors, matrices, subspaces and subgroups.

Addition has several important properties. It is commutative, meaning that the order of the operands does not matter, and it is associative, meaning that when one adds more than two numbers, the order in which addition is performed does not matter. Repeated addition of 1 is the same as counting (see Successor function). Addition of 0 does not change a number. Addition also obeys predictable rules concerning related operations such as subtraction and multiplication.

Addition is one of the simplest numerical tasks. Addition of very small numbers is accessible to toddlers; the most basic task, 1 + 1, can be performed by infants as young as five months, and even some members of other animal species. In primary education, students are taught to add numbers in the decimal system, starting with single digits and progressively tackling more difficult problems. Mechanical aids range from the ancient abacus to the modern computer, where research on the most efficient implementations of addition continues to this day.


Notation and terminology


Addition is written using the plus sign "+" between the terms;[3] that is, in infix notation. The result is expressed with an equals sign. For example, 1 + 2 = 3 and 5 + 4 + 2 = 11.

There are also situations where addition is "understood", even though no symbol appears:

- A whole number followed immediately by a fraction indicates the sum of the two, called a mixed number.[4] For example, 3½ = 3 + ½ = 3.5. This notation can cause confusion, since in most other contexts, juxtaposition denotes multiplication instead.[5]

The sum of a series of related numbers can be expressed through capital sigma notation, which compactly denotes iteration. For example,

$$\sum_{k=1}^{5} k^2 = 1^2 + 2^2 + 3^2 + 4^2 + 5^2 = 55.$$
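The iteration that sigma notation abbreviates can be sketched as an ordinary loop. The following is only an illustration: the function name `sum_of_squares` and the choice of k² as the summand are assumptions made for the example.

```c
#include <assert.h>

/* Hypothetical helper: computes the sum of k*k for k = first..last,
   the loop form of a sigma expression whose summand is k^2. */
long sum_of_squares(int first, int last) {
    assert(first <= last);          /* this sketch assumes a non-empty index range */
    long total = 0;                 /* running total starts at zero */
    for (int k = first; k <= last; k++) {
        total += (long)k * k;       /* add one term per value of the index */
    }
    return total;
}
```

Calling `sum_of_squares(1, 5)` adds 1 + 4 + 9 + 16 + 25.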

Terms

The numbers or the objects to be added in general addition are collectively referred to as the terms,[6] the addends[7][8][9] or the summands;[10] this terminology carries over to the summation of multiple terms. This is to be distinguished from factors, which are multiplied. Some authors call the first addend the augend.[7][8][9] In fact, during the Renaissance, many authors did not consider the first addend an "addend" at all. Today, due to the commutative property of addition, "augend" is rarely used, and both terms are generally called addends.[11]

All of the above terminology derives from Latin. "Addition" and "add" are English words derived from the Latin verb addere, which is in turn a compound of ad "to" and dare "to give", from the Proto-Indo-European root *deh₃- "to give"; thus to add is to give to.[11] Using the gerundive suffix -nd results in "addend", "thing to be added".[a] Likewise from augere "to increase", one gets "augend", "thing to be increased".

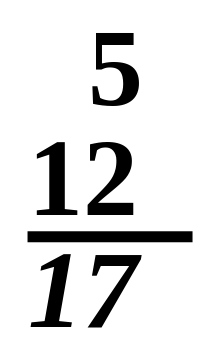

"Sum" and "summand" derive from the Latin noun summa "the highest, the top" and associated verb summare. This is appropriate not only because the sum of two positive numbers is greater than either, but because it was common for the ancient Greeks and Romans to add upward, contrary to the modern practice of adding downward, so that a sum was literally higher than the addends.[13] Addere and summare date back at least to Boethius, if not to earlier Roman writers such as Vitruvius and Frontinus; Boethius also used several other terms for the addition operation. The later Middle English terms "adden" and "adding" were popularized by Chaucer.[14]

The plus sign "+" (Unicode: U+002B; ASCII: decimal 43) is an abbreviation of the Latin word et, meaning "and".[15] It appears in mathematical works dating back to at least 1489.[16]

Interpretations

Addition is used to model many physical processes. Even for the simple case of adding natural numbers, there are many possible interpretations and even more visual representations.

Combining sets


Possibly the most basic interpretation of addition lies in combining sets:

- When two or more disjoint collections are combined into a single collection, the number of objects in the single collection is the sum of the numbers of objects in the original collections.

This interpretation is easy to visualize, with little danger of ambiguity. It is also useful in higher mathematics (for the rigorous definition it inspires, see § Natural numbers below). However, it is not obvious how one should extend this version of addition to include fractional numbers or negative numbers.[17]

One possible fix is to consider collections of objects that can be easily divided, such as pies or, still better, segmented rods.[18] Rather than solely combining collections of segments, rods can be joined end-to-end, which illustrates another conception of addition: adding not the rods but the lengths of the rods.

Extending a length


A second interpretation of addition comes from extending an initial length by a given length:

- When an original length is extended by a given amount, the final length is the sum of the original length and the length of the extension.[19]

The sum a + b can be interpreted as a binary operation that combines a and b, in an algebraic sense, or it can be interpreted as the addition of b more units to a. Under the latter interpretation, the parts of a sum a + b play asymmetric roles, and the operation a + b is viewed as applying the unary operation +b to a.[20] Instead of calling both a and b addends, it is more appropriate to call a the augend in this case, since a plays a passive role. The unary view is also useful when discussing subtraction, because each unary addition operation has an inverse unary subtraction operation, and vice versa.

Properties

Commutativity

Addition is commutative, meaning that one can change the order of the terms in a sum, but still get the same result. Symbolically, if a and b are any two numbers, then

- a + b = b + a.

The fact that addition is commutative is known as the "commutative law of addition" or "commutative property of addition". Some other binary operations are commutative, such as multiplication, but many others, such as subtraction and division, are not.

Associativity


Addition is associative, which means that when three or more numbers are added together, the order of operations does not change the result.

As an example, should the expression a + b + c be defined to mean (a + b) + c or a + (b + c)? Given that addition is associative, the choice of definition is irrelevant. For any three numbers a, b, and c, it is true that (a + b) + c = a + (b + c). For example, (1 + 2) + 3 = 3 + 3 = 6 = 1 + 5 = 1 + (2 + 3).

When addition is used together with other operations, the order of operations becomes important. In the standard order of operations, addition has lower priority than exponentiation, nth roots, multiplication and division, but has the same priority as subtraction.[21]

Identity element

Adding zero to any number does not change the number; this means that zero is the identity element for addition, and is also known as the additive identity. In symbols, for every a, one has

- a + 0 = 0 + a = a.

This law was first identified in Brahmagupta's Brahmasphutasiddhanta in 628 AD, although he wrote it as three separate laws, depending on whether a is negative, positive, or zero itself, and he used words rather than algebraic symbols. Later Indian mathematicians refined the concept; around the year 830, Mahavira wrote, "zero becomes the same as what is added to it", corresponding to the unary statement 0 + a = a. In the 12th century, Bhaskara wrote, "In the addition of cipher, or subtraction of it, the quantity, positive or negative, remains the same", corresponding to the unary statement a + 0 = a.[22]

Successor

Within the context of integers, addition of one also plays a special role: for any integer a, the integer (a + 1) is the least integer greater than a, also known as the successor of a.[23] For instance, 3 is the successor of 2 and 7 is the successor of 6. Because of this succession, the value of a + b can also be seen as the bth successor of a, making addition iterated succession. For example, 6 + 2 is 8, because 8 is the successor of 7, which is the successor of 6, making 8 the 2nd successor of 6.
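As a rough sketch of addition as iterated succession, restricted to non-negative values, the sum a + b can be computed by applying a successor step b times. The names `successor` and `add_by_succession` are hypothetical and chosen only for this illustration.

```c
#include <assert.h>
#include <limits.h>

/* The successor of n: the least integer greater than n. */
unsigned successor(unsigned n) {
    assert(n < UINT_MAX);            /* UINT_MAX has no representable successor */
    return n + 1;
}

/* Illustrative: a + b computed as the b-th successor of a. */
unsigned add_by_succession(unsigned a, unsigned b) {
    unsigned result = a;
    for (unsigned i = 0; i < b; i++) {
        result = successor(result);  /* one successor step per unit of b */
    }
    return result;
}
```

With this sketch, `add_by_succession(6, 2)` steps from 6 to 7 and then to 8.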

Units

To numerically add physical quantities with units, they must be expressed with common units.[24] For example, adding 50 milliliters to 150 milliliters gives 200 milliliters. However, if a measure of 5 feet is extended by 2 inches, the sum is 62 inches, since 5 feet is equal to 60 inches. On the other hand, it is usually meaningless to try to add 3 meters and 4 square meters, since those units are incomparable; this sort of consideration is fundamental in dimensional analysis.[25]
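One way to make the common-unit requirement concrete is to convert every quantity to a single unit before adding. The sketch below assumes a hypothetical `Length` type that always stores inches; the type and function names are illustrative only.

```c
#include <assert.h>

#define INCHES_PER_FOOT 12

/* Hypothetical length type: every value is stored in inches, so any two
   lengths already share a common unit when they are added. */
typedef struct { long inches; } Length;

Length from_feet(long feet) {
    assert(feet >= 0);                          /* lengths are non-negative here */
    Length l = { feet * INCHES_PER_FOOT };      /* convert to the common unit */
    return l;
}

Length from_inches(long inches) {
    assert(inches >= 0);
    Length l = { inches };
    return l;
}

Length add_lengths(Length a, Length b) {
    Length sum = { a.inches + b.inches };       /* both operands are in inches */
    return sum;
}
```

For instance, `add_lengths(from_feet(5), from_inches(2))` holds 62 inches.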

Performing addition

Innate ability

Studies on mathematical development starting around the 1980s have exploited the phenomenon of habituation: infants look longer at situations that are unexpected.[26] A seminal experiment by Karen Wynn in 1992 involving Mickey Mouse dolls manipulated behind a screen demonstrated that five-month-old infants expect 1 + 1 to be 2, and they are comparatively surprised when a physical situation seems to imply that 1 + 1 is either 1 or 3. This finding has since been affirmed by a variety of laboratories using different methodologies.[27] Another 1992 experiment with older toddlers, between 18 and 35 months, exploited their development of motor control by allowing them to retrieve ping-pong balls from a box; the youngest responded well for small numbers, while older subjects were able to compute sums up to 5.[28]

Even some nonhuman animals show a limited ability to add, particularly primates. In a 1995 experiment imitating Wynn's 1992 result (but using eggplants instead of dolls), rhesus macaque and cottontop tamarin monkeys performed similarly to human infants. More dramatically, after being taught the meanings of the Arabic numerals 0 through 4, one chimpanzee was able to compute the sum of two numerals without further training.[29] More recently, Asian elephants have demonstrated an ability to perform basic arithmetic.[30]

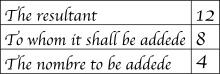

Childhood learning

Typically, children first master counting. When given a problem that requires that two items and three items be combined, young children model the situation with physical objects, often fingers or a drawing, and then count the total. As they gain experience, they learn or discover the strategy of "counting-on": asked to find two plus three, children count three past two, saying "three, four, five" (usually ticking off fingers), and arriving at five. This strategy seems almost universal; children can easily pick it up from peers or teachers.[31] Most discover it independently. With additional experience, children learn to add more quickly by exploiting the commutativity of addition by counting up from the larger number, in this case, starting with three and counting "four, five." Eventually children begin to recall certain addition facts ("number bonds"), either through experience or rote memorization. Once some facts are committed to memory, children begin to derive unknown facts from known ones. For example, a child asked to add six and seven may know that 6 + 6 = 12 and then reason that 6 + 7 is one more, or 13.[32] Such derived facts can be found very quickly and most elementary school students eventually rely on a mixture of memorized and derived facts to add fluently.[33]

Different nations introduce whole numbers and arithmetic at different ages, with many countries teaching addition in pre-school.[34] However, throughout the world, addition is taught by the end of the first year of elementary school.[35]

Table

Children are often presented with the addition table of pairs of numbers from 0 to 9 to memorize. Knowing this table, children can perform any addition.

| + | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

| 1 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

| 2 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |

| 3 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |

| 4 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |

| 5 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |

| 6 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |

| 7 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 |

| 8 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 |

| 9 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 |

Decimal system

The prerequisite to addition in the decimal system is the fluent recall or derivation of the 100 single-digit "addition facts". One could memorize all the facts by rote, but pattern-based strategies are more enlightening and, for most people, more efficient:[36]

- Commutative property: Mentioned above, using the pattern a + b = b + a reduces the number of "addition facts" from 100 to 55.

- One or two more: Adding 1 or 2 is a basic task, and it can be accomplished through counting on or, ultimately, intuition.[36]

- Zero: Since zero is the additive identity, adding zero is trivial. Nonetheless, in the teaching of arithmetic, some students are introduced to addition as a process that always increases the addends; word problems may help rationalize the "exception" of zero.[36]

- Doubles: Adding a number to itself is related to counting by two and to multiplication. Doubles facts form a backbone for many related facts, and students find them relatively easy to grasp.[36]

- Near-doubles: Sums such as 6 + 7 = 13 can be quickly derived from the doubles fact 6 + 6 = 12 by adding one more, or from 7 + 7 = 14 but subtracting one.[36]

- Five and ten: Sums of the form 5 + x and 10 + x are usually memorized early and can be used for deriving other facts. For example, 6 + 7 = 13 can be derived from 5 + 7 = 12 by adding one more.[36]

- Making ten: An advanced strategy uses 10 as an intermediate for sums involving 8 or 9; for example, 8 + 6 = 8 + 2 + 4 = 10 + 4 = 14.[36]

As students grow older, they commit more facts to memory, and learn to derive other facts rapidly and fluently. Many students never commit all the facts to memory, but can still find any basic fact quickly.[33]

Carry

The standard algorithm for adding multidigit numbers is to align the addends vertically and add the columns, starting from the ones column on the right. If a column exceeds nine, the extra digit is "carried" into the next column. For example, in the addition 27 + 59

       ¹
       27
     + 59
     ----
       86

7 + 9 = 16, and the digit 1 is the carry.[b] An alternate strategy starts adding from the most significant digit on the left; this route makes carrying a little clumsier, but it is faster at getting a rough estimate of the sum. There are many alternative methods.
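The column-by-column procedure with carrying can be sketched in code. The following is a minimal illustration that works on decimal digit strings; the function name `add_decimal_strings` and the caller-supplied output buffer are assumptions of this sketch, not a standard interface.

```c
#include <assert.h>
#include <string.h>

/* Illustrative sketch: adds two non-negative decimal numerals given as
   digit strings and writes the sum into out. The caller must provide a
   buffer of at least max(strlen(a), strlen(b)) + 2 characters. */
void add_decimal_strings(const char *a, const char *b, char *out) {
    size_t la = strlen(a), lb = strlen(b);
    size_t n = (la > lb ? la : lb) + 1;        /* one extra column for a final carry */
    int carry = 0;
    out[n] = '\0';
    for (size_t i = 0; i < n; i++) {           /* i counts columns from the right */
        int da = i < la ? a[la - 1 - i] - '0' : 0;
        int db = i < lb ? b[lb - 1 - i] - '0' : 0;
        int column = da + db + carry;
        out[n - 1 - i] = (char)('0' + column % 10);  /* digit written under the column */
        carry = column / 10;                          /* extra digit carried leftward */
    }
    assert(carry == 0);                        /* the extra column absorbed the last carry */
    if (n > 1 && out[0] == '0') {
        memmove(out, out + 1, n);              /* drop an unused leading zero */
    }
}
```

Given the strings "27" and "59", the ones column produces 16, so the digit 6 is written and 1 is carried into the tens column.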

Since the end of the 20th century, some US programs, including TERC, decided to remove the traditional transfer method from their curriculum.[37] This decision was criticized,[38] which is why some states and counties did not support this experiment.

Decimal fractions

Decimal fractions can be added by a simple modification of the above process.[39] One aligns two decimal fractions above each other, with the decimal point in the same location. If necessary, one can add trailing zeros to a shorter decimal to make it the same length as the longer decimal. Finally, one performs the same addition process as above, except the decimal point is placed in the answer, exactly where it was placed in the summands.

As an example, 45.1 + 4.34 can be solved as follows:

      4 5 . 1 0
    + 0 4 . 3 4
    -----------
      4 9 . 4 4
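The alignment step can be sketched with a simple fixed-point representation, in which padding with trailing zeros corresponds to multiplying the digits by ten. The `Decimal` type below is a hypothetical representation introduced only for this example.

```c
#include <assert.h>

/* Hypothetical fixed-point decimal: value = digits / 10^scale,
   so 45.1 is {451, 1} and 4.34 is {434, 2}. */
typedef struct { long digits; int scale; } Decimal;

Decimal decimal_add(Decimal x, Decimal y) {
    assert(x.scale >= 0 && y.scale >= 0);
    /* Append trailing zeros to the shorter operand until the decimal points align. */
    while (x.scale < y.scale) { x.digits *= 10; x.scale++; }
    while (y.scale < x.scale) { y.digits *= 10; y.scale++; }
    /* Add as whole numbers; the decimal point stays where it was in the summands. */
    Decimal sum = { x.digits + y.digits, x.scale };
    return sum;
}
```

Adding {451, 1} and {434, 2} first pads 45.1 to 45.10 and then yields {4944, 2}, that is, 49.44.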

Scientific notation

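In scientific notation, numbers are written in the form $x = a \times 10^{b}$, where $a$ is the significand and $10^{b}$ is the exponential part. Addition requires two numbers in scientific notation to be represented using the same exponential part, so that the two significands can simply be added.

For example:

$$2.34 \times 10^{-5} + 5.67 \times 10^{-6} = 2.34 \times 10^{-5} + 0.567 \times 10^{-5} = 2.907 \times 10^{-5}$$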

Non-decimal

Addition in other bases is very similar to decimal addition. As an example, one can consider addition in binary.[40] Adding two single-digit binary numbers is relatively simple, using a form of carrying:

- 0 + 0 → 0

- 0 + 1 → 1

- 1 + 0 → 1

- 1 + 1 → 0, carry 1 (since 1 + 1 = 2 = 0 + (1 × 2¹))

Adding two "1" digits produces a digit "0", while 1 must be added to the next column. This is similar to what happens in decimal when certain single-digit numbers are added together; if the result equals or exceeds the value of the radix (10), the digit to the left is incremented:

- 5 + 5 → 0, carry 1 (since 5 + 5 = 10 = 0 + (1 × 10¹))

- 7 + 9 → 6, carry 1 (since 7 + 9 = 16 = 6 + (1 × 10¹))

This is known as carrying.[41] When the result of an addition exceeds the value of a digit, the procedure is to "carry" the excess amount divided by the radix (that is, 10/10) to the left, adding it to the next positional value. This is correct since the next position has a weight that is higher by a factor equal to the radix. Carrying works the same way in binary:

1 1 1 1 1 (carried digits)

0 1 1 0 1

+ 1 0 1 1 1

—————————————

1 0 0 1 0 0 = 36

In this example, two numerals are being added together: 01101₂ (13₁₀) and 10111₂ (23₁₀). The top row shows the carry bits used. Starting in the rightmost column, 1 + 1 = 10₂. The 1 is carried to the left, and the 0 is written at the bottom of the rightmost column. The second column from the right is added: 1 + 0 + 1 = 10₂ again; the 1 is carried, and 0 is written at the bottom. The third column: 1 + 1 + 1 = 11₂. This time, a 1 is carried, and a 1 is written in the bottom row. Proceeding like this gives the final answer 100100₂ (36₁₀).

Computers


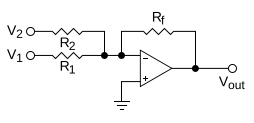

Analog computers work directly with physical quantities, so their addition mechanisms depend on the form of the addends. A mechanical adder might represent two addends as the positions of sliding blocks, in which case they can be added with an averaging lever. If the addends are the rotation speeds of two shafts, they can be added with a differential. A hydraulic adder can add the pressures in two chambers by exploiting Newton's second law to balance forces on an assembly of pistons. The most common situation for a general-purpose analog computer is to add two voltages (referenced to ground); this can be accomplished roughly with a resistor network, but a better design exploits an operational amplifier.[42]

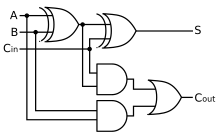

Addition is also fundamental to the operation of digital computers, where the efficiency of addition, in particular the carry mechanism, is an important limitation to overall performance.

The abacus, also called a counting frame, is a calculating tool that was in use centuries before the adoption of the written modern numeral system and is still widely used by merchants, traders and clerks in Asia, Africa, and elsewhere; it dates back to at least 2700–2300 BC, when it was used in Sumer.[43]

Blaise Pascal invented the mechanical calculator in 1642;[44] it was the first operational adding machine. It made use of a gravity-assisted carry mechanism. It was the only operational mechanical calculator in the 17th century[45] and the earliest automatic, digital computer. Pascal's calculator was limited by its carry mechanism, which forced its wheels to turn only one way, so that it could only add. To subtract, the operator had to use the complement method of Pascal's calculator, which required as many steps as an addition. Giovanni Poleni followed Pascal, building the second functional mechanical calculator in 1709, a calculating clock made of wood that, once set up, could multiply two numbers automatically.

Adders execute integer addition in electronic digital computers, usually using binary arithmetic. The simplest architecture is the ripple carry adder, which follows the standard multi-digit algorithm. One slight improvement is the carry skip design, again following human intuition; one does not perform all the carries in computing 999 + 1, but one bypasses the group of 9s and skips to the answer.[46]
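A ripple-carry adder can be modeled in software as a chain of one-bit full adders, each passing its carry to the next bit position. The sketch below is an assumption-laden illustration rather than a hardware description: the 8-bit width, the names `full_adder` and `ripple_carry_add8`, and the decision to discard the final carry are all choices made for the example.

```c
#include <assert.h>
#include <stdint.h>

/* One-bit full adder: adds bits a and b plus an incoming carry.
   Returns the sum bit and stores the outgoing carry in *carry_out. */
static unsigned full_adder(unsigned a, unsigned b, unsigned carry_in,
                           unsigned *carry_out) {
    assert(a <= 1 && b <= 1 && carry_in <= 1);
    *carry_out = (a & b) | (carry_in & (a ^ b));
    return a ^ b ^ carry_in;
}

/* Illustrative 8-bit ripple-carry adder: the carry "ripples" from the
   least significant bit to the most significant, one full adder per bit.
   The final carry out is discarded, so the result wraps modulo 256. */
uint8_t ripple_carry_add8(uint8_t x, uint8_t y) {
    unsigned carry = 0;
    uint8_t sum = 0;
    for (int bit = 0; bit < 8; bit++) {
        unsigned s = full_adder((x >> bit) & 1u, (y >> bit) & 1u, carry, &carry);
        sum |= (uint8_t)(s << bit);
    }
    return sum;
}
```

Because each bit must wait for the carry from the bit below it, the delay of this design grows with the word width, which is the limitation that faster adder designs try to reduce.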

In practice, computational addition may be achieved via XOR and AND bitwise logical operations in conjunction with bitshift operations, as shown in the code below. Both XOR and AND gates are straightforward to realize in digital logic, allowing the realization of full adder circuits, which in turn may be combined into more complex logical operations. In modern digital computers, integer addition is typically the fastest arithmetic instruction, yet it has the largest impact on performance, since it underlies all floating-point operations as well as such basic tasks as address generation during memory access and fetching instructions during branching. To increase speed, modern designs calculate digits in parallel; these schemes go by such names as carry select, carry lookahead, and the Ling pseudocarry. Many implementations are, in fact, hybrids of these last three designs.[47][48]

Unlike addition on paper, addition on a computer often changes the addends. On the ancient abacus and adding board, both addends are destroyed, leaving only the sum. The influence of the abacus on mathematical thinking was strong enough that early Latin texts often claimed that in the process of adding "a number to a number", both numbers vanish.[49] In modern times, the ADD instruction of a microprocessor often replaces the augend with the sum but preserves the addend.[50] In a high-level programming language, evaluating a + b does not change either a or b; if the goal is to replace a with the sum, this must be explicitly requested, typically with the statement a = a + b. Some languages, such as C or C++, allow this to be abbreviated as a += b.

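    #include <stdint.h>

    // Iterative algorithm: adds two unsigned integers using only bitwise operations
    uint32_t add_iterative(uint32_t x, uint32_t y) {
        while (y != 0) {
            uint32_t carry = x & y; // bitwise AND marks the positions that generate a carry
            x = x ^ y;              // bitwise XOR adds each bit position, ignoring carries
            y = carry << 1;         // left bitshift moves each carry to the next position
        }
        return x;
    }

    // Recursive algorithm: same steps, with the loop expressed as recursion
    uint32_t add_recursive(uint32_t x, uint32_t y) {
        return (y == 0) ? x : add_recursive(x ^ y, (x & y) << 1);
    }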

On a computer, if the result of an addition is too large to store, an arithmetic overflow occurs, resulting in an incorrect answer. Unanticipated arithmetic overflow is a fairly common cause of program errors. Such overflow bugs may be hard to discover and diagnose because they may manifest themselves only for very large input data sets, which are less likely to be used in validation tests.[51] The Year 2000 problem was a series of bugs where overflow errors occurred due to use of a 2-digit format for years.[52]
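One common defensive pattern is to test whether a sum would overflow before performing the addition. The following is a minimal sketch of that idea for the C `int` type; the function name `checked_add` and its interface are hypothetical and not taken from any particular library.

```c
#include <assert.h>
#include <limits.h>
#include <stdbool.h>
#include <stddef.h>

/* Illustrative checked addition: stores a + b in *result and returns true,
   or returns false without adding when the sum would not fit in an int. */
bool checked_add(int a, int b, int *result) {
    assert(result != NULL);
    if ((b > 0 && a > INT_MAX - b) ||
        (b < 0 && a < INT_MIN - b)) {
        return false;               /* the true sum lies outside [INT_MIN, INT_MAX] */
    }
    *result = a + b;                /* safe: the range was checked above */
    return true;
}
```

The comparisons are arranged so that the check itself never computes an out-of-range value.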

Addition of numbers

To prove the usual properties of addition, one must first define addition for the context in question. Addition is first defined on the natural numbers. In set theory, addition is then extended to progressively larger sets that include the natural numbers: the integers, the rational numbers, and the real numbers.[53] (In mathematics education,[54] positive fractions are added before negative numbers are even considered; this is also the historical route.[55])

Natural numbers

There are two popular ways to define the sum of two natural numbers a and b. If one defines natural numbers to be the cardinalities of finite sets (the cardinality of a set is the number of elements in the set), then it is appropriate to define their sum as follows:

- Let N(S) be the cardinality of a set S. Take two disjoint sets A and B, with N(A) = a and N(B) = b. Then a + b is defined as N(A ∪ B).[56]

Here, A ∪ B is the union of A and B. An alternate version of this definition allows A and B to possibly overlap and then takes their disjoint union, a mechanism that allows common elements to be separated out and therefore counted twice.

The other popular definition is recursive:

- Let n⁺ be the successor of n, that is, the number following n in the natural numbers, so 0⁺ = 1, 1⁺ = 2. Define a + 0 = a. Define the general sum recursively by a + (b⁺) = (a + b)⁺. Hence 1 + 1 = 1 + 0⁺ = (1 + 0)⁺ = 1⁺ = 2.[57]

Again, there are minor variations upon this definition in the literature. Taken literally, the above definition is an application of the recursion theorem on the partially ordered set N².[58] On the other hand, some sources prefer to use a restricted recursion theorem that applies only to the set of natural numbers. One then considers a to be temporarily "fixed", applies recursion on b to define a function "a +", and pastes these unary operations for all a together to form the full binary operation.[59]

This recursive formulation of addition was developed by Dedekind as early as 1854, and he would expand upon it in the following decades.[60] He proved the associative and commutative properties, among others, through mathematical induction.

Integers

The simplest conception of an integer is that it consists of an absolute value (which is a natural number) and a sign (generally either positive or negative). The integer zero is a special third case, being neither positive nor negative. The corresponding definition of addition must proceed by cases:

- For an integer n, let |n| be its absolute value. Let a and b be integers. If either a or b is zero, treat it as an identity. If a and b are both positive, define a + b = |a| + |b|. If a and b are both negative, define a + b = −(|a| + |b|). If a and b have different signs, define a + b to be the difference between |a| and |b|, with the sign of the term whose absolute value is larger.[61] As an example, −6 + 4 = −2; because −6 and 4 have different signs, their absolute values are subtracted, and since the absolute value of the negative term is larger, the answer is negative.
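The case analysis can be written out directly. In the sketch below, the `SignedInt` type (a sign flag plus a natural-number magnitude) and the function name `add_by_cases` are hypothetical, introduced only to mirror the definition; overflow of the magnitude is not handled.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical sign-and-magnitude integer; zero is stored with negative == false. */
typedef struct { bool negative; unsigned magnitude; } SignedInt;

SignedInt add_by_cases(SignedInt a, SignedInt b) {
    assert(a.magnitude != 0 || !a.negative);   /* zero carries no sign */
    assert(b.magnitude != 0 || !b.negative);
    SignedInt sum;
    if (a.magnitude == 0) return b;            /* zero acts as an identity */
    if (b.magnitude == 0) return a;
    if (a.negative == b.negative) {            /* same sign: add the absolute values */
        sum.negative = a.negative;
        sum.magnitude = a.magnitude + b.magnitude;
    } else if (a.magnitude >= b.magnitude) {   /* different signs: take the difference */
        sum.magnitude = a.magnitude - b.magnitude;
        sum.negative = a.negative && sum.magnitude != 0;  /* sign of the larger term */
    } else {
        sum.magnitude = b.magnitude - a.magnitude;
        sum.negative = b.negative;
    }
    return sum;
}
```

For −6 + 4 the signs differ, so the magnitudes are subtracted to give 2, and the result takes the negative sign of the larger term.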

Although this definition can be useful for concrete problems, the number of cases to consider complicates proofs unnecessarily. So the following method is commonly used for defining integers. It is based on the remark that every integer is the difference of two natural numbers and that two such differences, a – b and c – d, are equal if and only if a + d = b + c. So, one can formally define the integers as the equivalence classes of ordered pairs of natural numbers under the equivalence relation

- (a, b) ~ (c, d) if and only if a + d = b + c.

The equivalence class of (a, b) contains either (a – b, 0) if a ≥ b, or (0, b – a) otherwise. If n is a natural number, one can denote by +n the equivalence class of (n, 0), and by –n the equivalence class of (0, n). This allows identifying the natural number n with the equivalence class +n.

Addition of ordered pairs is done component-wise:

- (a, b) + (c, d) = (a + c, b + d).

A straightforward computation shows that the equivalence class of the result depends only on the equivalence classes of the summands, and thus that this defines an addition of equivalence classes, that is, of integers.[62] Another straightforward computation shows that this addition is the same as the above case definition.
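The pair construction can be illustrated with a small sketch in which an ordered pair of natural numbers stands for their difference. The `IntPair` type and the function names are hypothetical, and the components are assumed small enough not to overflow.

```c
#include <assert.h>
#include <limits.h>
#include <stdbool.h>

/* Hypothetical representation: the pair (pos, neg) of natural numbers
   stands for the integer pos - neg. */
typedef struct { unsigned pos; unsigned neg; } IntPair;

/* Component-wise addition: (a, b) + (c, d) = (a + c, b + d). */
IntPair pair_add(IntPair x, IntPair y) {
    assert(x.pos <= UINT_MAX - y.pos && x.neg <= UINT_MAX - y.neg);
    IntPair sum = { x.pos + y.pos, x.neg + y.neg };
    return sum;
}

/* (a, b) ~ (c, d) if and only if a + d = b + c. */
bool pair_equivalent(IntPair x, IntPair y) {
    return x.pos + y.neg == x.neg + y.pos;
}
```

Here (0, 6) and (2, 8) both represent −6; adding (4, 0) to either one gives a pair equivalent to (0, 2), which illustrates that the result depends only on the equivalence classes.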

This way of defining integers as equivalence classes of pairs of natural numbers can be used to embed into a group any commutative semigroup with the cancellation property. Here, the semigroup is formed by the natural numbers and the group is the additive group of integers. The rational numbers are constructed similarly, by taking as semigroup the nonzero integers with multiplication.

This construction has also been generalized under the name of Grothendieck group to the case of any commutative semigroup. Without the cancellation property, the semigroup homomorphism from the semigroup into the group may be non-injective. Originally, the Grothendieck group was, more specifically, the result of this construction applied to the equivalence classes under isomorphisms of the objects of an abelian category, with the direct sum as semigroup operation.

Rational numbers (fractions)

Addition of rational numbers can be computed using the least common denominator, but a conceptually simpler definition involves only integer addition and multiplication:

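- Define $\frac{a}{b} + \frac{c}{d} = \frac{ad + bc}{bd}.$

As an example, the sum $\frac{3}{4} + \frac{1}{8} = \frac{3 \times 8 + 4 \times 1}{4 \times 8} = \frac{24 + 4}{32} = \frac{28}{32} = \frac{7}{8}$.

Addition of fractions is much simpler when the denominators are the same; in this case, one can simply add the numerators while leaving the denominator the same: $\frac{a}{c} + \frac{b}{c} = \frac{a + b}{c}$, so $\frac{1}{4} + \frac{2}{4} = \frac{1 + 2}{4} = \frac{3}{4}$.[63]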
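The cross-multiplication rule for adding fractions, (ad + bc)/(bd), translates directly into code. The sketch below assumes a hypothetical `Fraction` type with positive denominators and reduces the result to lowest terms with a greatest-common-divisor helper; overflow of the intermediate products is not handled.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical fraction type: num / den with den > 0. */
typedef struct { long num; long den; } Fraction;

/* Greatest common divisor by the Euclidean algorithm. */
static long gcd(long a, long b) {
    a = labs(a);
    b = labs(b);
    while (b != 0) {
        long t = a % b;
        a = b;
        b = t;
    }
    return a;
}

/* a/b + c/d = (ad + bc) / (bd), then reduced to lowest terms. */
Fraction fraction_add(Fraction x, Fraction y) {
    assert(x.den > 0 && y.den > 0);
    Fraction sum = { x.num * y.den + x.den * y.num, x.den * y.den };
    long g = gcd(sum.num, sum.den);   /* at least 1, because the denominator is nonzero */
    sum.num /= g;
    sum.den /= g;
    return sum;
}
```

Adding 3/4 and 1/8 this way gives 28/32 before reduction and 7/8 after.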

The commutativity and associativity of rational addition are easy consequences of the laws of integer arithmetic.[64] For a more rigorous and general discussion, see field of fractions.

Real numbers

A common construction of the set of real numbers is the Dedekind completion of the set of rational numbers. A real number is defined to be a Dedekind cut of rationals: a non-empty set of rationals that is closed downward and has no greatest element. The sum of real numbers a and b is defined element by element:

- Define a + b = {q + r | q ∈ a, r ∈ b}.[65]

This definition was first published, in a slightly modified form, by Richard Dedekind in 1872.[66] The commutativity and associativity of real addition are immediate; defining the real number 0 to be the set of negative rationals, it is easily seen to be the additive identity. Probably the trickiest part of this construction pertaining to addition is the definition of additive inverses.[67]

Unfortunately, dealing with multiplication of Dedekind cuts is a time-consuming case-by-case process similar to the addition of signed integers.[68] Another approach is the metric completion of the rational numbers. A real number is essentially defined to be the limit of a Cauchy sequence of rationals, lim aₙ. Addition is defined term by term:

- Define lim aₙ + lim bₙ = lim (aₙ + bₙ).[69]

This definition was first published by Georg Cantor, also in 1872, although his formalism was slightly different.[70] One must prove that this operation is well-defined, dealing with co-Cauchy sequences. Once that task is done, all the properties of real addition follow immediately from the properties of rational numbers. Furthermore, the other arithmetic operations, including multiplication, have straightforward, analogous definitions.[71]

Complex numbers

Complex numbers are added by adding the real and imaginary parts of the summands.[72][73] That is to say:

- (a + bi) + (c + di) = (a + c) + (b + d)i.

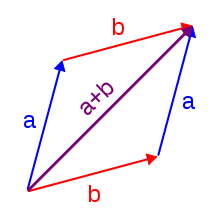

Using the visualization of complex numbers in the complex plane, the addition has the following geometric interpretation: the sum of two complex numbers A and B, interpreted as points of the complex plane, is the point X obtained by building a parallelogram three of whose vertices are O, A and B. Equivalently, X is the point such that the triangles with vertices O, A, B, and X, B, A, are congruent.

Generalizations

There are many binary operations that can be viewed as generalizations of the addition operation on the real numbers. The field of abstract algebra is centrally concerned with such generalized operations, and they also appear in set theory and category theory.

Abstract algebra

Vectors

In linear algebra, a vector space is an algebraic structure that allows for adding any two vectors and for scaling vectors. A familiar vector space is the set of all ordered pairs of real numbers; the ordered pair (a,b) is interpreted as a vector from the origin in the Euclidean plane to the point (a,b) in the plane. The sum of two vectors is obtained by adding their individual coordinates:

- (a, b) + (c, d) = (a + c, b + d).

This addition operation is central to classical mechanics, in which velocities, accelerations and forces are all represented by vectors.[74]

Matrices

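Matrix addition is defined for two matrices of the same dimensions. The sum of two m × n (pronounced "m by n") matrices A and B, denoted by A + B, is again an m × n matrix computed by adding corresponding elements:[75][76]

$$(A + B)_{ij} = a_{ij} + b_{ij}, \qquad 1 \le i \le m,\ 1 \le j \le n.$$

For example:

$$\begin{bmatrix} 1 & 3 \\ 1 & 0 \\ 1 & 2 \end{bmatrix} + \begin{bmatrix} 0 & 0 \\ 7 & 5 \\ 2 & 1 \end{bmatrix} = \begin{bmatrix} 1 + 0 & 3 + 0 \\ 1 + 7 & 0 + 5 \\ 1 + 2 & 2 + 1 \end{bmatrix} = \begin{bmatrix} 1 & 3 \\ 8 & 5 \\ 3 & 3 \end{bmatrix}$$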
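Element-wise matrix addition can be sketched in plain Python, with matrices represented as lists of rows. The function name `mat_add` and the sample matrices are illustrative assumptions.

```python
def mat_add(A, B):
    """Return A + B for two matrices (lists of rows) of equal dimensions."""
    if len(A) != len(B) or any(len(ra) != len(rb) for ra, rb in zip(A, B)):
        raise ValueError("matrices must have the same dimensions")
    # Add corresponding entries row by row.
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

A = [[1, 3], [1, 0], [1, 2]]
B = [[0, 0], [7, 5], [2, 1]]
print(mat_add(A, B))  # [[1, 3], [8, 5], [3, 3]]
```

The dimension check reflects the requirement that the sum is only defined for matrices of the same shape.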

Modular arithmetic

In modular arithmetic, the set of available numbers is restricted to a finite subset of the integers, and addition "wraps around" when reaching a certain value, called the modulus. For example, the set of integers modulo 12 has twelve elements; it inherits an addition operation from the integers that is central to musical set theory. The set of integers modulo 2 has just two elements; the addition operation it inherits is known in Boolean logic as the "exclusive or" function. A similar "wrap around" operation arises in geometry, where the sum of two angle measures is often taken to be their sum as real numbers modulo 2π. This amounts to an addition operation on the circle, which in turn generalizes to addition operations on many-dimensional tori.
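The three cases mentioned here (modulus 12, modulus 2, and angles modulo 2π) can be sketched as follows. The helper names `add_mod` and `add_angles` are hypothetical.

```python
import math

def add_mod(a, b, modulus):
    """Add two integers and wrap the result into the range 0..modulus-1."""
    return (a + b) % modulus

def add_angles(x, y):
    """Add two angle measures in radians, reduced modulo 2*pi."""
    return (x + y) % (2 * math.pi)

# Modulo 12: 9 + 5 wraps around to 2.
print(add_mod(9, 5, 12))

# Modulo 2: addition agrees with exclusive or on the bits 0 and 1.
xor_matches = all(add_mod(a, b, 2) == (a ^ b) for a in (0, 1) for b in (0, 1))
print(xor_matches)

# Angles: 3*pi/2 + pi is the same direction as pi/2 (up to rounding).
quarter_turn = add_angles(3 * math.pi / 2, math.pi)
print(quarter_turn)
```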

General theory

The general theory of abstract algebra allows an "addition" operation to be any associative and commutative operation on a set. Basic algebraic structures with such an addition operation include commutative monoids and abelian groups.

Set theory and category theory

A far-reaching generalization of addition of natural numbers is the addition of ordinal numbers and cardinal numbers in set theory. These give two different generalizations of addition of natural numbers to the transfinite. Unlike most addition operations, addition of ordinal numbers is not commutative.[77] Addition of cardinal numbers, however, is a commutative operation closely related to the disjoint union operation.

In category theory, disjoint union is seen as a particular case of the coproduct operation,[78] and general coproducts are perhaps the most abstract of all the generalizations of addition. Some coproducts, such as direct sum and wedge sum, are named to evoke their connection with addition.

Related operations

Addition, along with subtraction, multiplication and division, is considered one of the basic operations and is used in elementary arithmetic.

Arithmetic

Subtraction can be thought of as a kind of addition, namely the addition of an additive inverse. Subtraction is itself a sort of inverse to addition, in that adding x and subtracting x are inverse functions.

Given a set with an addition operation, one cannot always define a corresponding subtraction operation on that set; the set of natural numbers is a simple example. On the other hand, a subtraction operation uniquely determines an addition operation, an additive inverse operation, and an additive identity; for this reason, an additive group can be described as a set that is closed under subtraction.[79]

Multiplication can be thought of as repeated addition. If a single term x appears in a sum n times, then the sum is the product of n and x. If n is not a natural number, the product may still make sense; for example, multiplication by −1 yields the additive inverse of a number.
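For a natural number n, the repeated-addition reading of multiplication can be sketched directly. The function name `multiply_by_repeated_addition` is a hypothetical label for this illustration.

```python
def multiply_by_repeated_addition(n, x):
    """Return n * x for a natural number n by adding x to a running total n times."""
    if n < 0:
        raise ValueError("n must be a natural number for repeated addition")
    total = 0
    for _ in range(n):
        total += x
    return total

print(multiply_by_repeated_addition(4, 2.5))  # 10.0
print(multiply_by_repeated_addition(0, 7))    # 0 (the empty sum)
```

The check on n marks the limit of this reading: for other multipliers, such as −1, the product has to be defined by other means.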

In the real and complex numbers, addition and multiplication can be interchanged by the exponential function:[80]

$$e^{a + b} = e^a e^b.$$

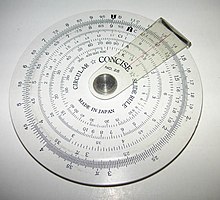

This identity allows multiplication to be carried out by consulting a table of logarithms and computing addition by hand; it also enables multiplication on a slide rule. The formula is still a good first-order approximation in the broad context of Lie groups, where it relates multiplication of infinitesimal group elements with addition of vectors in the associated Lie algebra.[81]
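The log-table method can be sketched for positive real numbers as follows: take logarithms, add them, then exponentiate. The function name `multiply_via_logs` is an assumption, and the result is subject to floating-point rounding.

```python
import math

def multiply_via_logs(x, y):
    """Multiply two positive reals by adding their natural logarithms."""
    if x <= 0 or y <= 0:
        raise ValueError("logarithms require positive inputs")
    return math.exp(math.log(x) + math.log(y))

product = multiply_via_logs(6.0, 7.0)
print(product)  # close to 42, up to rounding error
```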

There are even more generalizations of multiplication than addition.[82] In general, multiplication operations always distribute over addition; this requirement is formalized in the definition of a ring. In some contexts, such as the integers, distributivity over addition and the existence of a multiplicative identity are enough to uniquely determine the multiplication operation. The distributive property also provides information about addition; by expanding the product (1 + 1)(a + b) in both ways, one concludes that addition is forced to be commutative. For this reason, ring addition is commutative in general.[83]

Division is an arithmetic operation remotely related to addition. Since a/b = a(b⁻¹), division is right distributive over addition: (a + b) / c = a/c + b/c.[84] However, division is not left distributive over addition; 1 / (2 + 2) is not the same as 1/2 + 1/2.
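Both claims can be checked with exact rational arithmetic. The sample values below are arbitrary choices for illustration.

```python
from fractions import Fraction

a, b, c = Fraction(1), Fraction(2), Fraction(4)

# Right distributivity: (a + b) / c equals a/c + b/c.
right_holds = (a + b) / c == a / c + b / c
print(right_holds)

# Left distributivity fails: 1 / (2 + 2) is 1/4, but 1/2 + 1/2 is 1.
lhs = Fraction(1) / (2 + 2)
rhs = Fraction(1, 2) + Fraction(1, 2)
print(lhs, rhs)
```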

Ordering

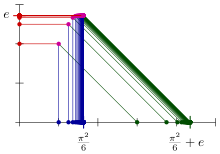

The maximum operation "max (a, b)" is a binary operation similar to addition. In fact, if two nonnegative numbers a and b are of different orders of magnitude, then their sum is approximately equal to their maximum. This approximation is extremely useful in the applications of mathematics, for example in truncating Taylor series. However, it presents a perpetual difficulty in numerical analysis, essentially since "max" is not invertible. If b is much greater than a, then a straightforward calculation of (a + b) − b can accumulate an unacceptable round-off error, perhaps even returning zero. See also Loss of significance.
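The round-off problem can be reproduced with IEEE 754 double-precision floats, which Python's `float` uses on common platforms. The particular magnitudes are assumptions chosen to make the effect visible.

```python
a = 1e-20
b = 1.0

# a is far below the spacing of doubles near 1.0, so a + b rounds to b
# and the subsequent subtraction returns zero instead of a.
naive = (a + b) - b
print(naive)      # 0.0

# Reordering so that the large terms cancel first preserves a.
reordered = a + (b - b)
print(reordered)  # 1e-20
```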

The approximation becomes exact in a kind of infinite limit; if either a or b is an infinite cardinal number, their cardinal sum is exactly equal to the greater of the two.[86] Accordingly, there is no subtraction operation for infinite cardinals.[87]

Maximization is commutative and associative, like addition. Furthermore, since addition preserves the ordering of real numbers, addition distributes over "max" in the same way that multiplication distributes over addition:

$$a + \max(b, c) = \max(a + b, a + c).$$

For these reasons, in tropical geometry one replaces multiplication with addition and addition with maximization. In this context, addition is called "tropical multiplication", maximization is called "tropical addition", and the tropical "additive identity" is negative infinity.[88] Some authors prefer to replace addition with minimization; then the additive identity is positive infinity.[89]

Tying these observations together, tropical addition is approximately related to regular addition through the logarithm:

$$\log(a + b) \approx \max(\log a, \log b),$$

which becomes more accurate as the base of the logarithm increases.[90] The approximation can be made exact by extracting a constant h, named by analogy with the Planck constant from quantum mechanics,[91] and taking the "classical limit" as h tends to zero:

$$\max(a, b) = \lim_{h \to 0} h \log\left(e^{a/h} + e^{b/h}\right).$$

In this sense, the maximum operation is a dequantized version of addition.[92]
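A numerical sketch of the limit, assuming it takes the form h·log(e^(a/h) + e^(b/h)). The function name `smooth_max` is hypothetical, and the code factors out max(a, b) before exponentiating, an algebraically equivalent rewriting used only to avoid floating-point overflow for small h.

```python
import math

def smooth_max(a, b, h):
    """Evaluate h * log(exp(a/h) + exp(b/h)) in an overflow-safe form."""
    m = max(a, b)
    # exp((a - m)/h) and exp((b - m)/h) are both at most 1, so no overflow.
    return m + h * math.log(math.exp((a - m) / h) + math.exp((b - m) / h))

a, b = 1.0, 3.0
for h in (1.0, 0.1, 0.01):
    # The values decrease toward max(a, b) = 3.0 as h shrinks.
    print(h, smooth_max(a, b, h))
```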

Other ways to add

Incrementation, also known as the successor operation, is the addition of 1 to a number.

Summation describes the addition of arbitrarily many numbers, usually more than just two. It includes the idea of the sum of a single number, which is itself, and the empty sum, which is zero.[93] An infinite summation is a delicate procedure known as a series.[94]
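The edge cases named here (a single term and the empty sum) fall out naturally when summation starts from the additive identity. A minimal sketch follows; the function name `summation` is an assumption.

```python
def summation(terms):
    """Add arbitrarily many numbers, starting from the additive identity 0."""
    total = 0
    for t in terms:
        total += t
    return total

print(summation([1, 2, 3, 4]))  # 10
print(summation([7]))           # 7: the sum of a single number is itself
print(summation([]))            # 0: the empty sum
```

Python's built-in `sum` follows the same convention and returns 0 for an empty iterable.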

Counting a finite set is equivalent to summing 1 over the set.

Integration is a kind of "summation" over a continuum, or more precisely and generally, over a differentiable manifold. Integration over a zero-dimensional manifold reduces to summation.

Linear combinations combine multiplication and summation; they are sums in which each term has a multiplier, usually a real or complex number. Linear combinations are especially useful in contexts where straightforward addition would violate some normalization rule, such as mixing of strategies in game theory or superposition of states in quantum mechanics.[95]

Convolution is used to add two independent random variables defined by distribution functions. Its usual definition combines integration, subtraction, and multiplication.[96] In general, convolution is useful as a kind of domain-side addition; by contrast, vector addition is a kind of range-side addition.
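For discrete random variables the convolution becomes a finite double sum. The sketch below adds two independent fair dice, an example not taken from the source, with distributions stored as dictionaries from value to probability. The name `convolve` is hypothetical. Looping over pairs (x, y) and accumulating at x + y is equivalent to the usual formula, which sums p(x)·q(s − x) over x.

```python
from fractions import Fraction

def convolve(p, q):
    """Distribution of X + Y for independent X ~ p and Y ~ q."""
    result = {}
    for x, px in p.items():
        for y, qy in q.items():
            # Each pair (x, y) contributes probability px * qy to the sum x + y.
            result[x + y] = result.get(x + y, 0) + px * qy
    return result

die = {face: Fraction(1, 6) for face in range(1, 7)}
two_dice = convolve(die, die)

print(two_dice[7])             # 1/6, the most likely total
print(sum(two_dice.values()))  # 1
```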

See also

- Lunar arithmetic

- Mental arithmetic

- Parallel addition (mathematics)

- Verbal arithmetic (also known as cryptarithms), puzzles involving addition

Notes

- ^ "Addend" is not a Latin word; in Latin it must be further conjugated, as in numerus addendus "the number to be added".

- ^ Some authors think that "carry" may be inappropriate for education; Van de Walle (p. 211) calls it "obsolete and conceptually misleading", preferring the word "trade". However, "carry" remains the standard term.

Footnotes

- ^ From Enderton (p. 138): "...select two sets K and L with card K = 2 and card L = 3. Sets of fingers are handy; sets of apples are preferred by textbooks."

- ^ Lewis, Rhys (1974). "Arithmetic". First-Year Technician Mathematics. Palgrave, London: The MacMillan Press Ltd. p. 1. doi:10.1007/978-1-349-02405-6_1. ISBN 978-1-349-02405-6.

- ^ "Addition". www.mathsisfun.com. Retrieved 2020-08-25.

- ^ Devine et al. p. 263

- ^ Mazur, Joseph. Enlightening Symbols: A Short History of Mathematical Notation and Its Hidden Powers. Princeton University Press, 2014. p. 161

- ^ Department of the Army (1961) Army Technical Manual TM 11-684: Principles and Applications of Mathematics for Communications-Electronics. Section 5.1

- ^ a b Shmerko, V.P.; Yanushkevich [Ânuškevič], Svetlana N. [Svitlana N.]; Lyshevski, S.E. (2009). Computer arithmetics for nanoelectronics. CRC Press. p. 80.

- ^ a b Schmid, Hermann (1974). Decimal Computation (1st ed.). Binghamton, NY: John Wiley & Sons. ISBN 0-471-76180-X. and Schmid, Hermann (1983) [1974]. Decimal Computation (reprint of 1st ed.). Malabar, FL: Robert E. Krieger Publishing Company. ISBN 978-0-89874-318-0.

- ^ a b Weisstein, Eric W. "Addition". mathworld.wolfram.com. Retrieved 2020-08-25.

- ^ Hosch, W.L. (Ed.). (2010). The Britannica Guide to Numbers and Measurement. The Rosen Publishing Group. p. 38

- ^ a b Schwartzman p. 19

- ^ Karpinski pp. 56–57, reproduced on p. 104

- ^ Schwartzman (p. 212) attributes adding upwards to the Greeks and Romans, saying it was about as common as adding downwards. On the other hand, Karpinski (p. 103) writes that Leonard of Pisa "introduces the novelty of writing the sum above the addends"; it is unclear whether Karpinski is claiming this as an original invention or simply the introduction of the practice to Europe.

- ^ Karpinski pp. 150–153

- ^ Cajori, Florian (1928). "Origin and meanings of the signs + and -". A History of Mathematical Notations, Vol. 1. The Open Court Company, Publishers.

- ^ "plus". Oxford English Dictionary (Online ed.). Oxford University Press. (Subscription or participating institution membership required.)

- ^ See Viro 2001 for an example of the sophistication involved in adding with sets of "fractional cardinality".

- ^ Adding it up (p. 73) compares adding measuring rods to adding sets of cats: "For example, inches can be subdivided into parts, which are hard to tell from the wholes, except that they are shorter; whereas it is painful to cats to divide them into parts, and it seriously changes their nature."

- ^ Mosley, F (2001). Using number lines with 5–8 year olds. Nelson Thornes. p. 8

- ^ Li, Y., & Lappan, G. (2014). Mathematics curriculum in school education. Springer. p. 204

- ^ Bronstein, Ilja Nikolaevič; Semendjajew, Konstantin Adolfovič (1987) [1945]. "2.4.1.1.". In Grosche, Günter; Ziegler, Viktor; Ziegler, Dorothea (eds.). Taschenbuch der Mathematik (in German). Vol. 1. Translated by Ziegler, Viktor. Weiß, Jürgen (23 ed.). Thun and Frankfurt am Main: Verlag Harri Deutsch (and B.G. Teubner Verlagsgesellschaft, Leipzig). pp. 115–120. ISBN 978-3-87144-492-0.

- ^ Kaplan pp. 69–71

- ^ Hempel, C.G. (2001). The philosophy of Carl G. Hempel: studies in science, explanation, and rationality. p. 7

- ^ R. Fierro (2012) Mathematics for Elementary School Teachers. Cengage Learning. Sec 2.3

- ^ Moebs, William; et al. (2022). "1.4 Dimensional Analysis". University Physics Volume 1. OpenStax. ISBN 978-1-947172-20-3.

- ^ Wynn p. 5

- ^ Wynn p. 15

- ^ Wynn p. 17

- ^ Wynn p. 19

- ^ Randerson, James (21 August 2008). "Elephants have a head for figures". The Guardian. Archived from the original on 2 April 2015. Retrieved 29 March 2015.

- ^ F. Smith p. 130

- ^ Carpenter, Thomas; Fennema, Elizabeth; Franke, Megan Loef; Levi, Linda; Empson, Susan (1999). Children's mathematics: Cognitively guided instruction. Portsmouth, NH: Heinemann. ISBN 978-0-325-00137-1.

- ^ a b Henry, Valerie J.; Brown, Richard S. (2008). "First-grade basic facts: An investigation into teaching and learning of an accelerated, high-demand memorization standard". Journal for Research in Mathematics Education. 39 (2): 153–183. doi:10.2307/30034895. JSTOR 30034895.

- ^ Beckmann, S. (2014). The twenty-third ICMI study: primary mathematics study on whole numbers. International Journal of STEM Education, 1(1), 1-8.

- ^ Schmidt, W., Houang, R., & Cogan, L. (2002). "A coherent curriculum". American Educator, 26(2), 1–18.

- ^ a b c d e f g Fosnot and Dolk p. 99

- ^ "Vertical addition and subtraction strategy". primarylearning.org. Retrieved April 20, 2022.

- ^ "Reviews of TERC: Investigations in Number, Data, and Space". nychold.com. Retrieved April 20, 2022.

- ^ Rebecca Wingard-Nelson (2014) Decimals and Fractions: It's Easy Enslow Publishers, Inc.

- ^ Dale R. Patrick, Stephen W. Fardo, Vigyan Chandra (2008) Electronic Digital System Fundamentals The Fairmont Press, Inc. p. 155

- ^ P.E. Bates Bothman (1837) The common school arithmetic. Henry Benton. p. 31

- ^ Truitt and Rogers pp. 1;44–49 and pp. 2;77–78

- ^ Ifrah, Georges (2001). The Universal History of Computing: From the Abacus to the Quantum Computer. New York: John Wiley & Sons, Inc. ISBN 978-0-471-39671-0. p. 11

- ^ Jean Marguin, p. 48 (1994); Quoting René Taton (1963)

- ^ See Competing designs in Pascal's calculator article

- ^ Flynn and Oberman pp. 2, 8

- ^ Flynn and Oberman pp. 1–9

- ^ Yeo, Sang-Soo, et al., eds. Algorithms and Architectures for Parallel Processing: 10th International Conference, ICA3PP 2010, Busan, Korea, May 21–23, 2010. Proceedings. Vol. 1. Springer, 2010. p. 194

- ^ Karpinski pp. 102–103

- ^ The identity of the augend and addend varies with architecture. For ADD in x86 see Horowitz and Hill p. 679; for ADD in 68k see p. 767.

- ^ Joshua Bloch, "Extra, Extra – Read All About It: Nearly All Binary Searches and Mergesorts are Broken" Archived 2016-04-01 at the Wayback Machine. Official Google Research Blog, June 2, 2006.

- ^ Neumann, Peter G. (2 February 1987). "The Risks Digest Volume 4: Issue 45". The Risks Digest. 4 (45). Archived from the original on 2014-12-28. Retrieved 2015-03-30.

- ^ Enderton chapters 4 and 5, for example, follow this development.

- ^ According to a survey of the nations with highest TIMSS mathematics test scores; see Schmidt, W., Houang, R., & Cogan, L. (2002). A coherent curriculum. American educator, 26(2), p. 4.

- ^ Baez (p. 37) explains the historical development, in "stark contrast" with the set theory presentation: "Apparently, half an apple is easier to understand than a negative apple!"

- ^ Begle p. 49, Johnson p. 120, Devine et al. p. 75

- ^ Enderton p. 79

- ^ For a version that applies to any poset with the descending chain condition, see Bergman p. 100.

- ^ Enderton (p. 79) observes, "But we want one binary operation +, not all these little one-place functions."

- ^ Ferreirós p. 223

- ^ K. Smith p. 234, Sparks and Rees p. 66

- ^ Enderton p. 92

- ^ Schyrlet Cameron, and Carolyn Craig (2013) Adding and Subtracting Fractions, Grades 5–8 Mark Twain, Inc.

- ^ The verifications are carried out in Enderton p. 104 and sketched for a general field of fractions over a commutative ring in Dummit and Foote p. 263.

- ^ Enderton p. 114

- ^ Ferreirós p. 135; see section 6 of Stetigkeit und irrationale Zahlen Archived 2005-10-31 at the Wayback Machine.

- ^ The intuitive approach, inverting every element of a cut and taking its complement, works only for irrational numbers; see Enderton p. 117 for details.

- ^ Schubert, E. Thomas, Phillip J. Windley, and James Alves-Foss. "Higher Order Logic Theorem Proving and Its Applications: Proceedings of the 8th International Workshop, volume 971 of." Lecture Notes in Computer Science (1995).

- ^ Textbook constructions are usually not so cavalier with the "lim" symbol; see Burrill (p. 138) for a more careful, drawn-out development of addition with Cauchy sequences.

- ^ Ferreirós p. 128

- ^ Burrill p. 140

- ^ Conway, John B. (1986), Functions of One Complex Variable I, Springer, ISBN 978-0-387-90328-6

- ^ Joshi, Kapil D (1989), Foundations of Discrete Mathematics, New York: John Wiley & Sons, ISBN 978-0-470-21152-6

- ^ Gbur, p. 1

- ^ Lipschutz, S., & Lipson, M. (2001). Schaum's outline of theory and problems of linear algebra. Erlangga.

- ^ Riley, K.F.; Hobson, M.P.; Bence, S.J. (2010). Mathematical methods for physics and engineering. Cambridge University Press. ISBN 978-0-521-86153-3.

- ^ Cheng, pp. 124–132

- ^ Riehl, p. 100

- ^ The set still must be nonempty. Dummit and Foote (p. 48) discuss this criterion written multiplicatively.

- ^ Rudin p. 178

- ^ Lee p. 526, Proposition 20.9

- ^ Linderholm (p. 49) observes, "By multiplication, properly speaking, a mathematician may mean practically anything. By addition he may mean a great variety of things, but not so great a variety as he will mean by 'multiplication'."

- ^ Dummit and Foote p. 224. For this argument to work, one still must assume that addition is a group operation and that multiplication has an identity.

- ^ For an example of left and right distributivity, see Loday, especially p. 15.

- ^ Compare Viro Figure 1 (p. 2)

- ^ Enderton calls this statement the "Absorption Law of Cardinal Arithmetic"; it depends on the comparability of cardinals and therefore on the Axiom of Choice.

- ^ Enderton p. 164

- ^ Mikhalkin p. 1

- ^ Akian et al. p. 4

- ^ Mikhalkin p. 2

- ^ Litvinov et al. p. 3

- ^ Viro p. 4

- ^ Martin p. 49

- ^ Stewart p. 8

- ^ Rieffel and Polak, p. 16

- ^ Gbur, p. 300

References

- History

- Ferreirós, José (1999). Labyrinth of Thought: A History of Set Theory and Its Role in Modern Mathematics. Birkhäuser. ISBN 978-0-8176-5749-9.

- Karpinski, Louis (1925). The History of Arithmetic. Rand McNally. LCC QA21.K3.

- Schwartzman, Steven (1994). The Words of Mathematics: An Etymological Dictionary of Mathematical Terms Used in English. MAA. ISBN 978-0-88385-511-9.

- Williams, Michael (1985). A History of Computing Technology. Prentice-Hall. ISBN 978-0-13-389917-7.

- Elementary mathematics

- Sparks, F.; Rees C. (1979). A Survey of Basic Mathematics. McGraw-Hill. ISBN 978-0-07-059902-4.

- Education

- Begle, Edward (1975). The Mathematics of the Elementary School. McGraw-Hill. ISBN 978-0-07-004325-1.

- California State Board of Education mathematics content standards Adopted December 1997, accessed December 2005.

- Devine, D.; Olson, J.; Olson, M. (1991). Elementary Mathematics for Teachers (2e ed.). Wiley. ISBN 978-0-471-85947-5.

- National Research Council (2001). Adding It Up: Helping Children Learn Mathematics. National Academy Press. doi:10.17226/9822. ISBN 978-0-309-06995-3.

- Van de Walle, John (2004). Elementary and Middle School Mathematics: Teaching developmentally (5e ed.). Pearson. ISBN 978-0-205-38689-5.

- Cognitive science

- Fosnot, Catherine T.; Dolk, Maarten (2001). Young Mathematicians at Work: Constructing Number Sense, Addition, and Subtraction. Heinemann. ISBN 978-0-325-00353-5.

- Wynn, Karen (1998). "Numerical competence in infants". The Development of Mathematical Skills. Taylor & Francis. ISBN 0-86377-816-X.

- Mathematical exposition

- Bogomolny, Alexander (1996). "Addition". Interactive Mathematics Miscellany and Puzzles (cut-the-knot.org). Archived from the original on April 26, 2006. Retrieved 3 February 2006.

- Cheng, Eugenia (2017). Beyond Infinity: An Expedition to the Outer Limits of Mathematics. Basic Books. ISBN 978-1-541-64413-7.

- Dunham, William (1994). The Mathematical Universe. Wiley. ISBN 978-0-471-53656-7.

- Johnson, Paul (1975). From Sticks and Stones: Personal Adventures in Mathematics. Science Research Associates. ISBN 978-0-574-19115-1.

- Linderholm, Carl (1971). Mathematics Made Difficult. Wolfe. ISBN 978-0-7234-0415-6.

- Smith, Frank (2002). The Glass Wall: Why Mathematics Can Seem Difficult. Teachers College Press. ISBN 978-0-8077-4242-6.

- Smith, Karl (1980). The Nature of Modern Mathematics (3rd ed.). Wadsworth. ISBN 978-0-8185-0352-8.

- Advanced mathematics

- Bergman, George (2005). An Invitation to General Algebra and Universal Constructions (2.3 ed.). General Printing. ISBN 978-0-9655211-4-7.

- Burrill, Claude (1967). Foundations of Real Numbers. McGraw-Hill. LCC QA248.B95.

- Dummit, D.; Foote, R. (1999). Abstract Algebra (2 ed.). Wiley. ISBN 978-0-471-36857-1.

- Gbur, Greg (2011). Mathematical Methods for Optical Physics and Engineering. Cambridge University Press. ISBN 978-0-511-91510-9. OCLC 704518582.

- Enderton, Herbert (1977). Elements of Set Theory. Academic Press. ISBN 978-0-12-238440-0.

- Lee, John (2003). Introduction to Smooth Manifolds. Springer. ISBN 978-0-387-95448-6.

- Martin, John (2003). Introduction to Languages and the Theory of Computation (3 ed.). McGraw-Hill. ISBN 978-0-07-232200-2.

- Riehl, Emily (2016). Category Theory in Context. Dover. ISBN 978-0-486-80903-8.

- Rudin, Walter (1976). Principles of Mathematical Analysis (3 ed.). McGraw-Hill. ISBN 978-0-07-054235-8.

- Stewart, James (1999). Calculus: Early Transcendentals (4 ed.). Brooks/Cole. ISBN 978-0-534-36298-0.

- Mathematical research

- Akian, Marianne; Bapat, Ravindra; Gaubert, Stephane (2005). "Min-plus methods in eigenvalue perturbation theory and generalised Lidskii-Vishik-Ljusternik theorem". INRIA Reports. arXiv:math.SP/0402090. Bibcode:2004math......2090A.

- Baez, J.; Dolan, J. (2001). Mathematics Unlimited – 2001 and Beyond. From Finite Sets to Feynman Diagrams. p. 29. arXiv:math.QA/0004133. ISBN 3-540-66913-2.

- Litvinov, Grigory; Maslov, Victor; Sobolevskii, Andreii (1999). Idempotent mathematics and interval analysis. Reliable Computing, Kluwer.

- Loday, Jean-Louis (2002). "Arithmetree". Journal of Algebra. 258: 275. arXiv:math/0112034. doi:10.1016/S0021-8693(02)00510-0.

- Mikhalkin, Grigory (2006). Sanz-Solé, Marta (ed.). Proceedings of the International Congress of Mathematicians (ICM), Madrid, Spain, August 22–30, 2006. Volume II: Invited lectures. Tropical Geometry and its Applications. Zürich: European Mathematical Society. pp. 827–852. arXiv:math.AG/0601041. ISBN 978-3-03719-022-7. Zbl 1103.14034.

- Viro, Oleg (2001). Casacuberta, Carles; Miró-Roig, Rosa Maria; Verdera, Joan; Xambó-Descamps, Sebastià (eds.). European Congress of Mathematics: Barcelona, July 10–14, 2000, Volume I. Dequantization of Real Algebraic Geometry on Logarithmic Paper. Progress in Mathematics. Vol. 201. Basel: Birkhäuser. pp. 135–146. arXiv:math/0005163. Bibcode:2000math......5163V. ISBN 978-3-7643-6417-5. Zbl 1024.14026.

- Computing

- Flynn, M.; Oberman, S. (2001). Advanced Computer Arithmetic Design. Wiley. ISBN 978-0-471-41209-0.

- Horowitz, P.; Hill, W. (2001). The Art of Electronics (2 ed.). Cambridge UP. ISBN 978-0-521-37095-0.

- Jackson, Albert (1960). Analog Computation. McGraw-Hill. LCC QA76.4 J3.

- Rieffel, Eleanor G.; Polak, Wolfgang H. (4 March 2011). Quantum Computing: A Gentle Introduction. MIT Press. ISBN 978-0-262-01506-6.

- Truitt, T.; Rogers, A. (1960). Basics of Analog Computers. John F. Rider. LCC QA76.4 T7.

- Marguin, Jean (1994). Histoire des Instruments et Machines à Calculer, Trois Siècles de Mécanique Pensante 1642–1942 (in French). Hermann. ISBN 978-2-7056-6166-3.

- Taton, René (1963). Le Calcul Mécanique. Que Sais-Je ? n° 367 (in French). Presses universitaires de France. pp. 20–28.

Further reading

- Baroody, Arthur; Tiilikainen, Sirpa (2003). The Development of Arithmetic Concepts and Skills. Two perspectives on addition development. Routledge. p. 75. ISBN 0-8058-3155-X.

- Davison, David M.; Landau, Marsha S.; McCracken, Leah; Thompson, Linda (1999). Mathematics: Explorations & Applications (TE ed.). Prentice Hall. ISBN 978-0-13-435817-8.

- Bunt, Lucas N.H.; Jones, Phillip S.; Bedient, Jack D. (1976). The Historical roots of Elementary Mathematics. Prentice-Hall. ISBN 978-0-13-389015-0.

- Poonen, Bjorn (2010). "Addition". Girls' Angle Bulletin. 3 (3–5). ISSN 2151-5743.

- Weaver, J. Fred (1982). "Addition and Subtraction: A Cognitive Perspective". Addition and Subtraction: A Cognitive Perspective. Interpretations of Number Operations and Symbolic Representations of Addition and Subtraction. Taylor & Francis. p. 60. ISBN 0-89859-171-6.
